In real production, the physical dimensions of robots, the parts they handle, and other objects in the cell change with workshop temperature: metals expand or contract, and the efficiency of electrical components drifts.
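The standard linear-expansion relation ΔL = α·L·ΔT shows how large the temperature effect can be for a robot-sized member. The sketch below is illustrative only. It assumes typical textbook expansion coefficients, and real alloys vary. It also assumes a hypothetical 1 m link and a 10 K temperature swing. `thermal_growth_mm` is a made-up helper, not part of any robot software.

```python
# Linear thermal expansion: delta_L = alpha * L * delta_T
# Assumed typical coefficients (per kelvin); real alloys vary.
ALPHA_PER_K = {
    "steel": 12e-6,     # carbon steel is roughly 11-13 x 10^-6 /K
    "aluminum": 23e-6,  # aluminum is roughly 23 x 10^-6 /K
}


def thermal_growth_mm(length_mm: float, delta_t_k: float, material: str) -> float:
    """Change in length (mm) of a member of the given length and material."""
    return ALPHA_PER_K[material] * length_mm * delta_t_k


if __name__ == "__main__":
    # Hypothetical 1 m member, 10 K swing between a cold morning and a warm afternoon.
    for material in ALPHA_PER_K:
        print(f"{material}: {thermal_growth_mm(1000.0, 10.0, material):.3f} mm")
```

Under these assumptions, a steel member grows by about 0.12 mm and an aluminum one by about 0.23 mm. Either figure already exceeds a 0.1 mm positioning target before any electrical drift is counted.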

Little by little, these mechanical and electrical variations (or errors) accumulate and eventually degrade the accuracy of the entire robot. Robot accuracy is the ability to reach a programmed point in space. Repeatability is the ability to return to the same point again and again. Users who expect a robot rated for 0.1 mm repeatability to position a part to within 0.1 mm are usually disappointed. They are confusing the two properties and overestimating the robot's true accuracy.
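The position metrics defined in ISO 9283 make the difference between accuracy and repeatability concrete. Accuracy is the distance from the commanded point to the barycenter (mean) of the points the robot actually reached. Repeatability is the mean distance of those points from their barycenter, plus three standard deviations. The sketch below is a simplified, position-only version of those definitions. The measurement values are invented to illustrate a robot that repeats tightly around the wrong spot.

```python
import math
from statistics import mean, stdev

Point = tuple[float, float, float]  # x, y, z in mm


def barycenter(points: list[Point]) -> Point:
    """Mean position of the attained points."""
    xs, ys, zs = zip(*points)
    return (mean(xs), mean(ys), mean(zs))


def position_accuracy(commanded: Point, attained: list[Point]) -> float:
    """Distance from the commanded point to the barycenter of the attained points."""
    return math.dist(commanded, barycenter(attained))


def position_repeatability(attained: list[Point]) -> float:
    """Mean distance to the barycenter plus three sample standard deviations."""
    center = barycenter(attained)
    distances = [math.dist(p, center) for p in attained]
    return mean(distances) + 3 * stdev(distances)


if __name__ == "__main__":
    commanded = (0.0, 0.0, 0.0)
    # Hypothetical measurements: tight cluster, offset about 0.3 mm in X.
    attained = [(0.30, 0.01, 0.0), (0.31, -0.01, 0.0), (0.29, 0.0, 0.01), (0.30, 0.0, -0.01)]
    print(f"accuracy: {position_accuracy(commanded, attained):.3f} mm")
    print(f"repeatability: {position_repeatability(attained):.3f} mm")
```

With these made-up points, the robot repeats to about 0.02 mm but misses the commanded point by 0.3 mm. This is the gap users overlook when they read a repeatability figure as an accuracy figure.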

Vision-guided robots (VGRs) use an industrial camera connected to a computer running image-processing software. The software compares the actual position of the part or robot with its expected position in 3D space and calculates an offset for the robot controller. This gives the system an active way to compensate for these deviations. Machine vision, however, introduces cumulative errors of its own. Internal factors include lighting variation and sensor response. External factors include surface finish and differences in part placement caused by the gripping system.

Unfortunately, most end users do not understand the root causes of accuracy and repeatability errors. Nor do they know how to calculate the combined error of the robot and vision system when designing a VGR solution. Selecting the right machine vision system for the robot's actual cumulative error takes considerable experience in both machine vision and robot programming. That experience is needed before the full project specification can be settled.
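One common way to estimate the cumulative error is an error budget. Each contributor is listed and then combined in one of two ways. A worst-case sum assumes every error peaks at once. A root-sum-square (RSS) assumes the errors are independent and random. The contributors and values below are hypothetical placeholders, not figures for any real robot or camera.

```python
import math


def worst_case_mm(errors: dict[str, float]) -> float:
    """Assume every contributor reaches its limit in the same direction."""
    return sum(abs(e) for e in errors.values())


def rss_mm(errors: dict[str, float]) -> float:
    """Root-sum-square: assumes contributors are independent and random."""
    return math.sqrt(sum(e * e for e in errors.values()))


# Hypothetical error budget in mm; replace with measured or datasheet values.
BUDGET = {
    "robot absolute accuracy": 0.30,
    "camera-to-robot calibration": 0.10,
    "feature detection": 0.05,
    "part shift in gripper": 0.10,
}

if __name__ == "__main__":
    tolerance_mm = 0.50
    for label, total in (("worst case", worst_case_mm(BUDGET)), ("RSS", rss_mm(BUDGET))):
        verdict = "within" if total <= tolerance_mm else "exceeds"
        print(f"{label}: {total:.3f} mm ({verdict} {tolerance_mm} mm tolerance)")
```

Here the RSS estimate (about 0.34 mm) fits a 0.5 mm tolerance, but the worst case (0.55 mm) does not. Which figure to trust depends on whether the errors really are independent.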

Seek Common Ground, Allow for Differences

Every VGR application is different because every robot, environment, manufactured part, and process is different. There are therefore many ways to solve the problem, but the ultimate goal is always the same: a system that performs a specific task at a specified rate, within the required tolerances, at the lowest possible cost.

All of this depends on a complete and thorough understanding of the application requirements. What does the part look like? What are its dimensions, texture, and orientation as the robot will actually encounter it in production, not just as described in a CAD file? What are the environmental conditions on the shop floor, from temperature changes to lighting changes? What does the robot need to do with the part? How does that task affect the choice of robot, including its speed, its force, and the part's mass and that mass's effect on positioning?

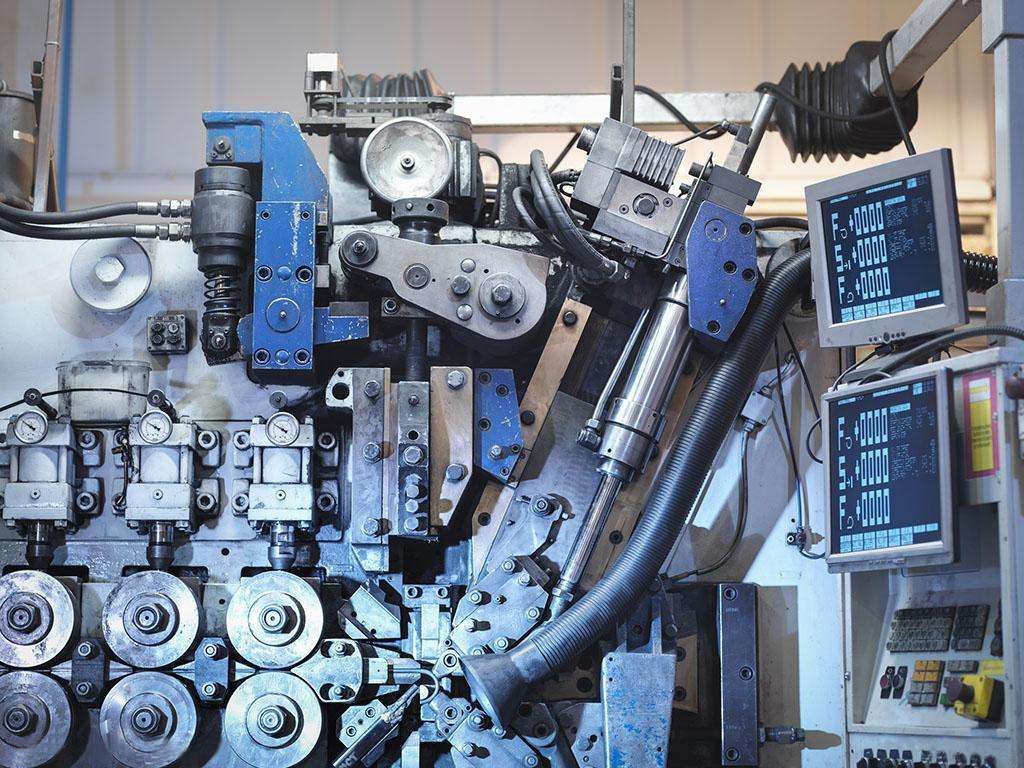

In the example shown in the figure, a robot places a part such as a door frame onto a shelf. Even with carefully designed padding and mechanical fixtures in place, the part's position often varies. A machine vision system can measure these positional deviations so that the robot reliably completes the placing or picking task on the shelf.

With this information, most users can form a general idea of what the robots from a particular OEM already installed on the shop floor can deliver. Depending on the part and how precisely it must be located, an experienced designer can then select the right robot model for the application. A robot is essentially a kinematic model built from a unique mechanical structure and electrical (or hydraulic) controls. Most robot OEMs offer an absolute accuracy service that measures the absolute accuracy of each individual robot. This information is especially valuable when the combined robot and vision error comes close to the part-handling accuracy and repeatability the project requires.
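The controller computes the tool position from a kinematic model. Any difference between the modeled and real geometry therefore shows up directly as absolute-position error. The toy example below uses a planar two-link arm; real six-axis robots use far fuller models. It applies hypothetical link-length deviations of 0.1 mm and 0.05 mm, small deviations of the kind temperature changes can cause. `forward_kinematics` is an illustrative helper.

```python
import math


def forward_kinematics(l1: float, l2: float, theta1: float, theta2: float) -> tuple[float, float]:
    """Tool-point (x, y) of a planar two-link arm; lengths in mm, angles in radians."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


if __name__ == "__main__":
    t1, t2 = math.radians(30), math.radians(45)
    # Link lengths as stored in the controller's model.
    modeled = forward_kinematics(500.0, 400.0, t1, t2)
    # Hypothetical real link lengths, slightly longer than modeled.
    actual = forward_kinematics(500.1, 400.05, t1, t2)
    print(f"TCP error from link-length mismatch: {math.dist(modeled, actual):.3f} mm")
```

For these joint angles, the mismatch moves the tool point by about 0.14 mm even though every joint angle is exact. This is the kind of error that absolute accuracy calibration targets.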

After defining the project requirements and selecting the appropriate robot, the designer must program the robot to perform the desired task. The robot must be able to locate each part fed into the workspace in one of two ways. It can rely on fixtures that consistently present the part in a known 3D position and orientation. Or it can use a vision system that measures how far the part deviates from its nominal pose, so the robot can correct its trajectory.
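One common 2D approach to the vision-based option works in two steps. First, teach a pick pose while the part sits at a reference pose. Then re-express that pick relative to wherever the camera finds the part. The planar (x, y, θ) sketch below assumes the camera has already been calibrated to the robot base frame, so all poses share one coordinate system. `Pose2D` and `corrected_pick` are hypothetical names. 3D guidance would need full six-degree-of-freedom transforms instead.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    x: float      # mm, robot base frame
    y: float      # mm, robot base frame
    theta: float  # radians

    def compose(self, other: "Pose2D") -> "Pose2D":
        """Return self * other, i.e. `other` expressed in the frame of `self`."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(self.x + c * other.x - s * other.y,
                      self.y + s * other.x + c * other.y,
                      self.theta + other.theta)

    def inverse(self) -> "Pose2D":
        """Rigid-transform inverse: rotation R^T, translation -R^T * t."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(-c * self.x - s * self.y, s * self.x - c * self.y, -self.theta)


def corrected_pick(taught_pick: Pose2D, reference_part: Pose2D, found_part: Pose2D) -> Pose2D:
    """Move a taught pick pose by the part's measured deviation from its reference pose."""
    offset = found_part.compose(reference_part.inverse())
    return offset.compose(taught_pick)


if __name__ == "__main__":
    taught = Pose2D(120.0, 0.0, 0.0)          # grasp taught 20 mm along the part's X axis
    reference = Pose2D(100.0, 0.0, 0.0)       # part pose when the grasp was taught
    found = Pose2D(100.0, 0.0, math.pi / 2)   # camera finds the part rotated 90 degrees
    print(corrected_pick(taught, reference, found))
```

Suppose the camera finds the part rotated 90° about its reference origin at (100, 0). A grasp taught 20 mm along the part's X axis then moves to about (100, 20), rotated by the same 90°.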

Today, more manufacturers are choosing vision systems over fixtures. Fixtures must be custom-built and lack the flexibility to handle multiple parts on the same assembly line. They also prevent the robotic cell from being redeployed elsewhere in the plant without further investment. Machine vision systems can be reprogrammed. If the robot's components and systems can be adapted to a new application, the whole cell can be reused in the factory like any other piece of equipment.

Adding Vision to the VGR

Once the application is clearly defined, the next step is to determine what information the robot needs from the vision system to meet the requirements. Is a 2D vision system sufficient if relatively flat parts are carried on a flat conveyor belt? Does the application need orientation and relative height information in addition to X and Y positions, which would call for a 2.5D vision solution? Or, for hole inspection applications, is full 3D information needed to describe the clamping lugs and clamping points on the part?

2D and 2.5D solutions are relatively simple and can usually be handled with a single camera. This works provided each pixel covers a small enough area of the field of view to deliver the required spatial resolution. For 3D vision, designers have several options:

- single-camera 3D
- single- or multi-camera 3D with structured-light triangulation
- multi-camera stereo vision

Each approach has advantages and disadvantages. For example, single-camera 3D solutions are very accurate over relatively narrow fields of view but need multiple images to construct 3D point coordinates. Stereo vision is very accurate over large fields of view. Its accuracy can be improved further with structured light sources such as fringe (grating) projectors, LEDs, or laser generators, at the cost of additional hardware. All of these systems rely on frequent calibration to ensure that bumps, thermal expansion, and other factors do not introduce errors into the 3D data.
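Two back-of-the-envelope calculations help when sizing cameras for these options. The first divides the field of view by the pixel count to get object-space resolution. The second uses the rectified-stereo relation Z = f·B/d and its derivative, which shows depth resolution degrading with the square of distance. Here f is the focal length in pixels, B the baseline, and d the disparity. The default of three pixels across the smallest feature is an assumed rule of thumb. All numbers in the usage note are hypothetical.

```python
import math


def mm_per_pixel(fov_mm: float, pixels: int) -> float:
    """Object-space distance covered by one pixel along one image axis."""
    return fov_mm / pixels


def min_pixels(fov_mm: float, feature_mm: float, pixels_per_feature: float = 3.0) -> int:
    """Pixels needed along one axis so the smallest feature spans `pixels_per_feature` pixels."""
    return math.ceil(fov_mm / feature_mm * pixels_per_feature)


def stereo_depth_mm(focal_px: float, baseline_mm: float, disparity_px: float) -> float:
    """Rectified stereo triangulation: Z = f * B / d."""
    return focal_px * baseline_mm / disparity_px


def stereo_depth_step_mm(focal_px: float, baseline_mm: float, z_mm: float,
                         disparity_step_px: float = 1.0) -> float:
    """Depth change for a small disparity change: |dZ| ~ Z**2 / (f * B) * dd."""
    return z_mm ** 2 / (focal_px * baseline_mm) * disparity_step_px


if __name__ == "__main__":
    print(f"{mm_per_pixel(500.0, 2500):.2f} mm per pixel")
    print(f"{min_pixels(500.0, 0.5)} pixels needed across a 500 mm field for 0.5 mm features")
    for z in (1000.0, 2000.0):
        step = stereo_depth_step_mm(2000.0, 200.0, z)
        print(f"Z = {z:.0f} mm: {step:.1f} mm per pixel of disparity")
```

As an example, take a 500 mm field of view imaged on 2500 pixels: it gives 0.2 mm per pixel. A stereo pair with f = 2000 px and a 200 mm baseline resolves depth at 1 m to about 2.5 mm per pixel of disparity, before sub-pixel matching. At 2 m, that figure rises to 10 mm.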

The effect of lighting on machine vision systems is poorly understood. Light, or more precisely variation in light, can strongly affect a machine vision system no matter how accurate the vision solution is. Lighting is often the last factor considered in a vision system, but it belongs early in the design process. How the camera perceives the interaction between light and parts is fundamental to a successful machine vision solution.

For example, if your shop has windows, infrared lighting is a poor choice. Sunlight carries a large share of its energy in the red and near-infrared bands and will compete with the light source. Choosing the most suitable light color (white, blue, yellow, red, etc.) requires an understanding of the physics of light and optics. Does the VGR application need to distinguish very similar colors on the parts, which calls for a color camera and colored light? Or is the color difference large enough that a grayscale camera with a band-pass filter and a matching light source will do? The grayscale option processes less data and costs less.
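The data saving from a grayscale camera is simple arithmetic. The sketch assumes a mono camera that delivers 8-bit pixels and a color camera that delivers demosaiced 8-bit RGB (3 bytes per pixel). A color camera that outputs raw Bayer data sends the same number of bytes as a mono camera, but the demosaicing still has to be done somewhere. The 2448 × 2048 sensor and 20 fps rate are hypothetical.

```python
# Compare uncompressed image data volume for a mono vs. an RGB color camera.
MONO8_BYTES = 1  # one 8-bit gray value per pixel
RGB8_BYTES = 3   # assumed demosaiced output: 8 bits each for R, G, B


def frame_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


def data_rate_mb_per_s(width: int, height: int, bytes_per_pixel: int, fps: float) -> float:
    """Sustained data rate in megabytes (10^6 bytes) per second."""
    return frame_bytes(width, height, bytes_per_pixel) * fps / 1e6


if __name__ == "__main__":
    w, h, fps = 2448, 2048, 20.0  # hypothetical sensor size and frame rate
    for label, bpp in (("mono", MONO8_BYTES), ("color", RGB8_BYTES)):
        print(f"{label}: {data_rate_mb_per_s(w, h, bpp, fps):.1f} MB/s")
```

Under those assumptions, the mono camera produces about 100 MB/s and the RGB stream about 301 MB/s.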

Matching light color to the part cannot be summed up in a nutshell, but two basic rules apply. First, don't use a light source similar to the room's ambient light. Second, don't use a light color opposite (complementary) to the part's color, because the part will absorb that light. The exception is when you are deliberately using backlighting or dark-field lighting.
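The second rule can be illustrated with a deliberately crude three-band model. In it, a part's apparent brightness is the sum, over red, green, and blue, of its reflectance times the light's intensity. Real parts and LEDs have continuous spectra, so treat the reflectance values and the `apparent_brightness` helper below as hypothetical.

```python
# Crude three-band (R, G, B) model of how a colored part looks under colored light.
Band = tuple[float, float, float]


def apparent_brightness(reflectance: Band, light: Band) -> float:
    """Light reflected toward the camera: sum of reflectance x illumination per band."""
    return sum(r * i for r, i in zip(reflectance, light))


# Hypothetical part that reflects mostly red light.
RED_PART: Band = (0.8, 0.1, 0.1)

# Each light has the same total intensity (1.0) so the comparison is fair.
LIGHTS: dict[str, Band] = {
    "red": (1.0, 0.0, 0.0),
    "white": (1 / 3, 1 / 3, 1 / 3),
    "cyan": (0.0, 0.5, 0.5),  # cyan is the color opposite red
}

if __name__ == "__main__":
    for name, light in LIGHTS.items():
        print(f"{name:>5} light: {apparent_brightness(RED_PART, light):.2f}")
```

In this model, a red part comes out bright under red light (0.8) but dark under cyan light, the color opposite red (about 0.1). That dark result is useful only when the part should appear as a silhouette, as with backlighting.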